SoloFinger

Aditya, Adwait, Bruno

(CHI 2021)

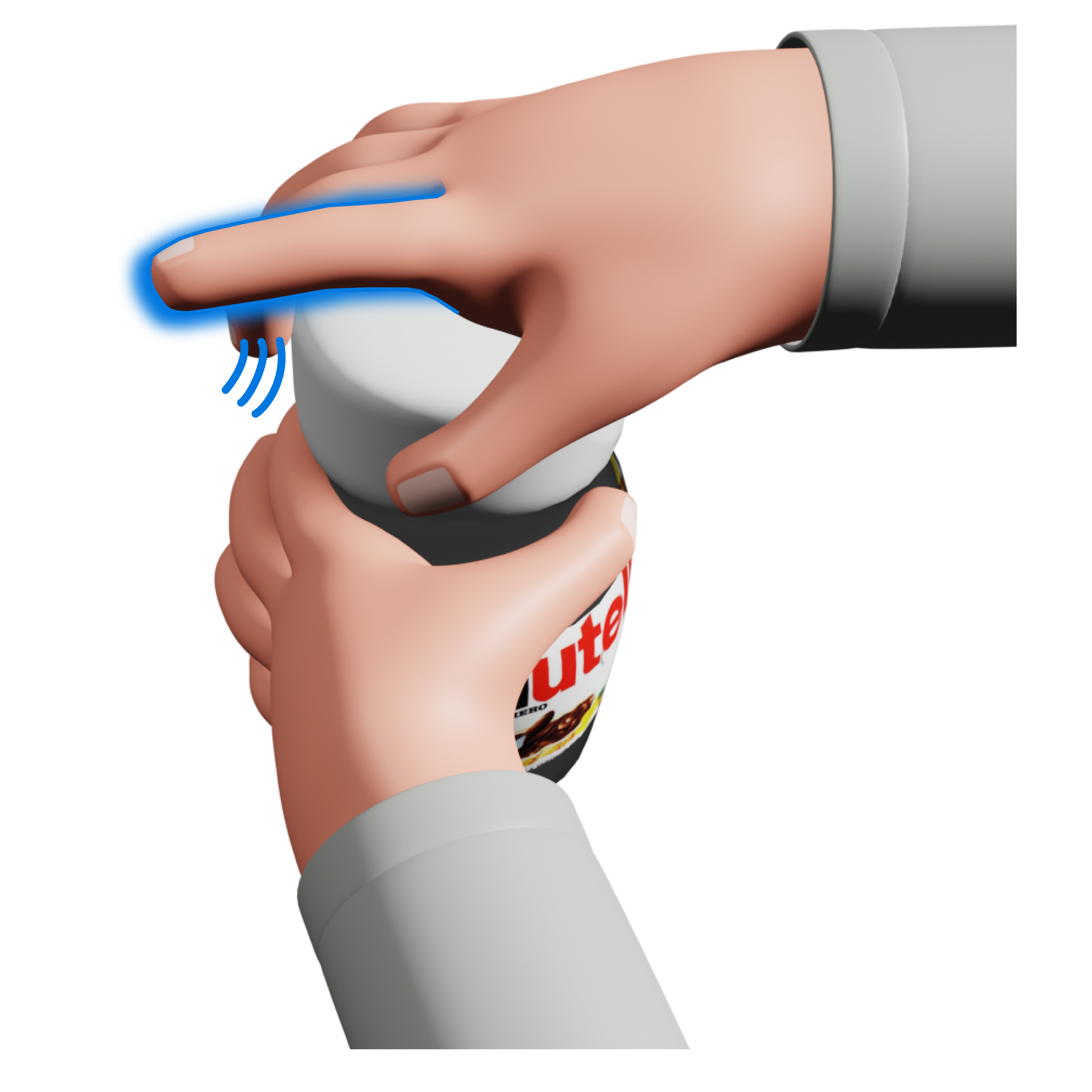

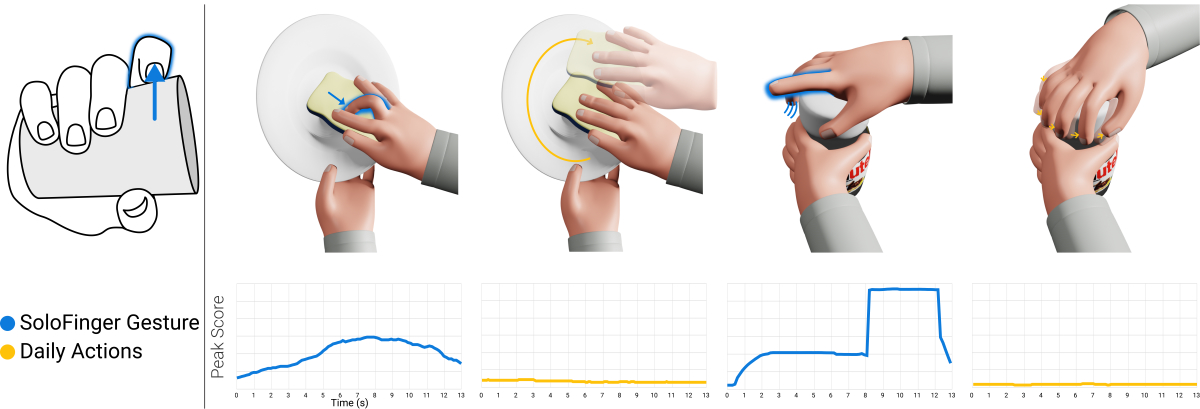

Using microgestures, prior work has successfully enabled gestural interactions while holding objects. Yet, these existing methods are prone to false activations caused by natural finger movements while holding or manipulating the object. We address this issue with SoloFinger, a novel concept that allows the design of microgestures that are robust against movements that naturally occur during primary activities. Using a data-driven approach, we establish that single-finger movements are rare in everyday hand-object actions and infer a single-finger input technique resilient to false activation. We demonstrate this concept’s robustness using a white-box classifier on a pre-existing dataset comprising 36 everyday hand-object actions. Our findings validate that simple SoloFinger gestures can relieve the need for complex finger configurations or delimiting gestures and that SoloFinger is applicable to diverse hand-object actions. Finally, we demonstrate SoloFinger’s high performance on commodity hardware using random forest classification.
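To make the core idea concrete, here is a minimal sketch of a rule that fires only when exactly one finger moves while all the others stay still. It is an illustration of the concept, not the classifier from the paper: the function name `solo_finger`, the per-finger motion values, and both thresholds are hypothetical and would need to be derived from real tracking data.

```python
from typing import Optional, Sequence

FINGERS = ("thumb", "index", "middle", "ring", "pinky")


def solo_finger(
    motion: Sequence[float],
    move_threshold: float = 1.0,   # hypothetical: minimum motion to count as "moving"
    still_threshold: float = 0.3,  # hypothetical: maximum motion to count as "still"
) -> Optional[str]:
    """Return the name of the single finger that moved alone, or None.

    `motion` holds one non-negative motion magnitude per finger, in the
    order given by FINGERS. Units are an assumption of this sketch.
    """
    if len(motion) != len(FINGERS):
        raise ValueError("expected one motion value per finger")

    # Fingers whose motion is large enough to count as deliberate movement.
    moving = [i for i, m in enumerate(motion) if m >= move_threshold]

    # All remaining fingers must be clearly still, not merely "less active".
    others_still = all(
        m <= still_threshold for i, m in enumerate(motion) if i not in moving
    )

    if len(moving) == 1 and others_still:
        return FINGERS[moving[0]]
    return None


print(solo_finger([0.1, 1.5, 0.2, 0.1, 0.0]))  # only the index finger moves
print(solo_finger([0.1, 1.5, 1.2, 0.1, 0.0]))  # two fingers move: rejected
```

The gap between the two thresholds is a deliberate choice in this sketch: a finger that moves a moderate amount causes the input to be rejected, which favors avoiding false activations over recognizing every gesture.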

As mentioned in the paper, the SoloFinger dataset is available for download. It may be used only for scientific and/or non-commercial purposes.

If you use this dataset, you are required to cite the SoloFinger (CHI’21) paper. For more information on the dataset, please check out the attached README file.

Compressed ZIP file with the OptiTrack and VR glove dataset: SoloFinger_dataset.zip (104 MB)