Research

Our research mission is to contribute to the next generation of user interfaces that seamlessly merge with the physical world and the human body. These interfaces enable more effective, expressive, and engaging interactions with computing systems and devices. Moreover, they are compatible with challenging mobility contexts and integrate well with real-world activities. This opens up a wide range of applications in various fields, including mobile and wearable computing, robotics, smart homes, and health and fitness devices.

We develop user interface technologies for advanced sensing and displays, invent new concepts for interaction, and empirically study user behavior.

Our focus areas include:

- Soft, flexible and stretchable user interfaces

- Touch and multi-touch interactions

- Body-based user interfaces

- Wearable devices, interactive skin and electronic textiles

- Mobile computing

- Augmented Reality

- Haptics

- Human-Robot Interaction

- Digital fabrication and rapid prototyping

- New materials for interaction

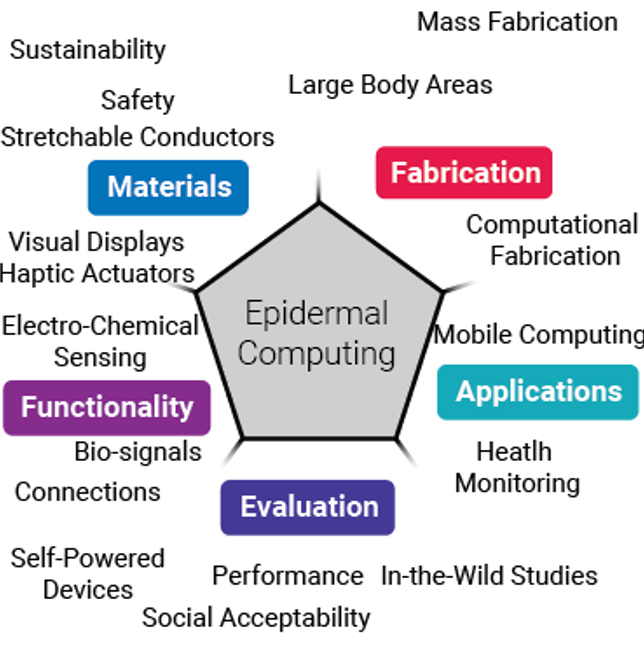

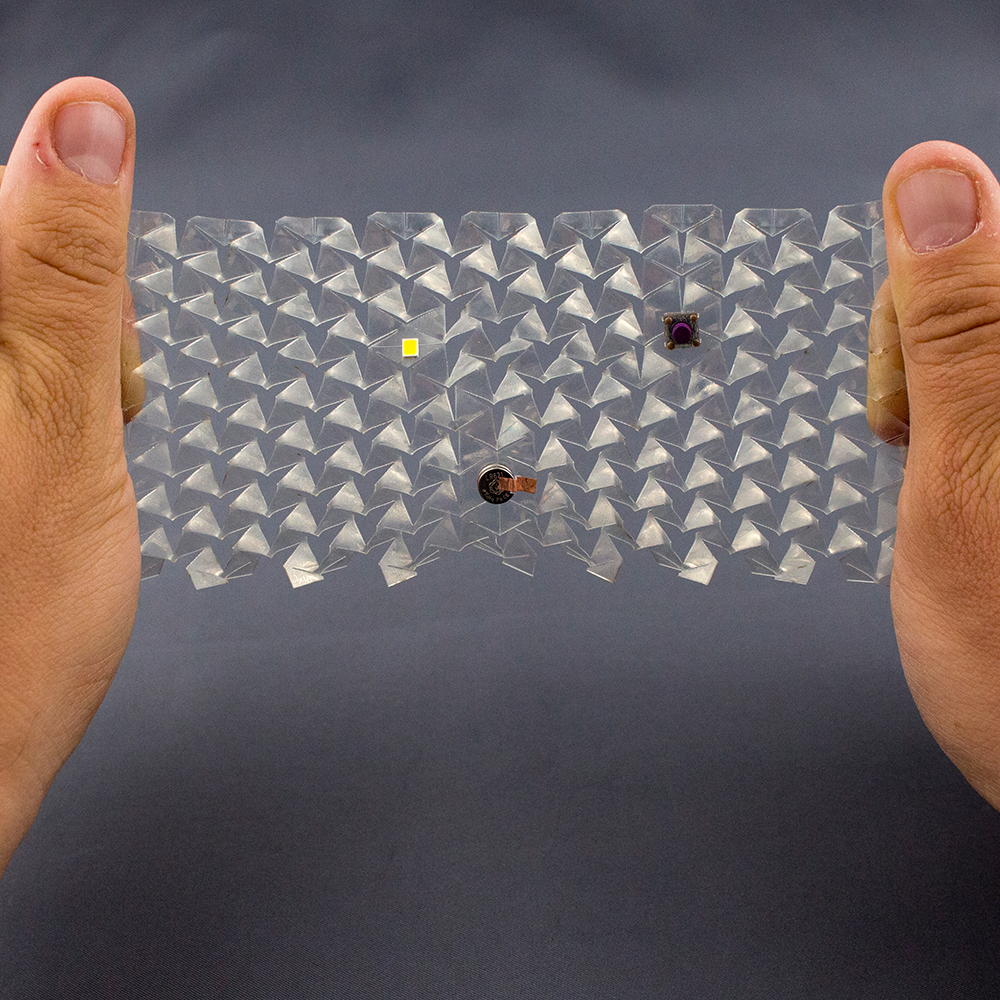

Next Steps in Epidermal Computing

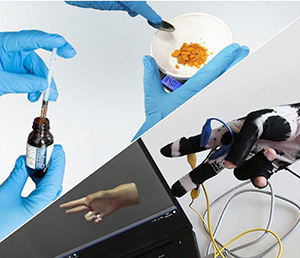

Skin is a promising interaction medium and has been widely explored for mobile and expressive interaction. Recent research in HCI has seen the development of Epidermal Computing Devices: ultra-thin, non-invasive devices that reside on the user’s skin. They offer intimate integration with the curved surfaces of the body while having physical and mechanical properties akin to skin, expanding the horizon of on-body interaction.

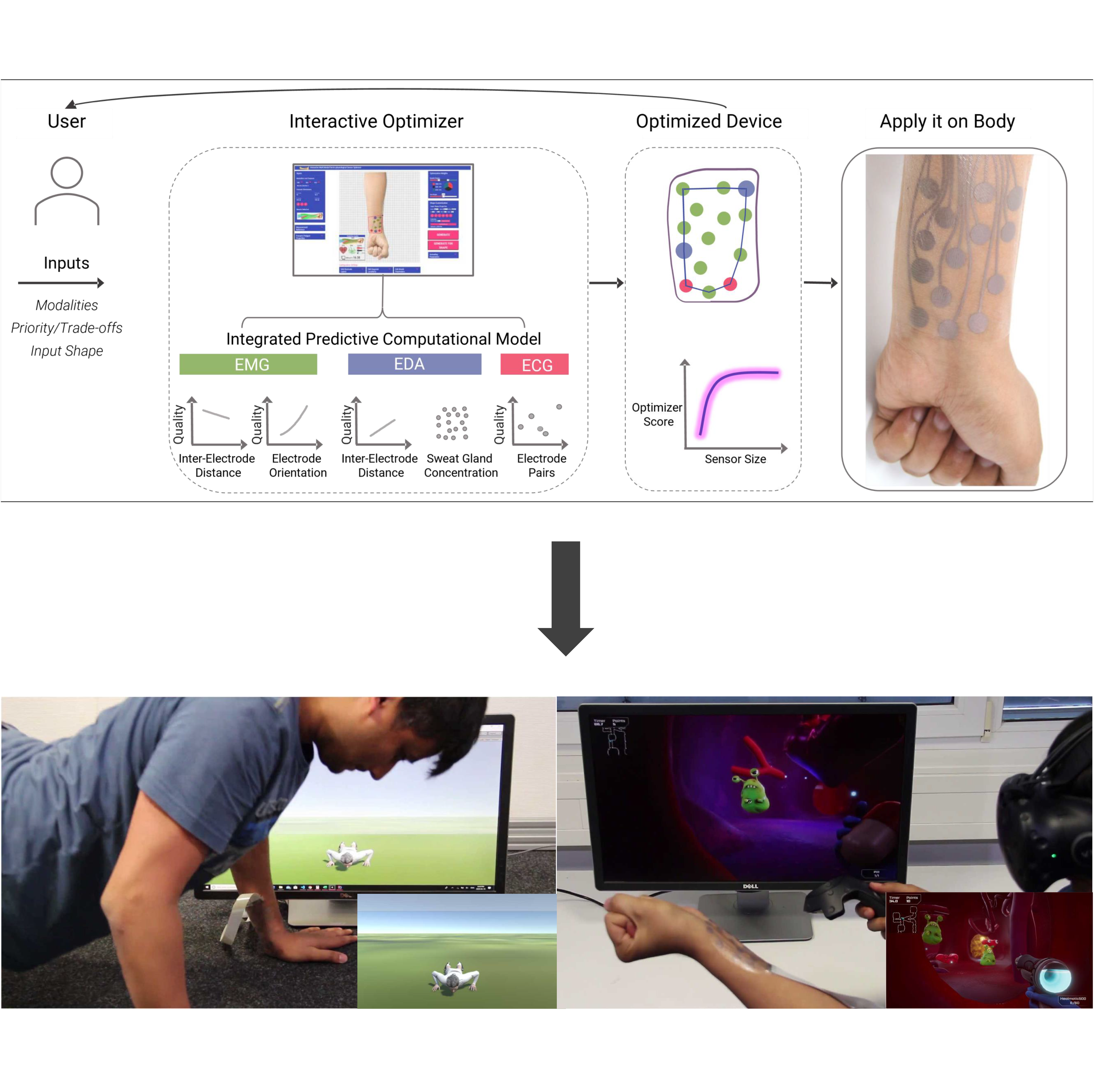

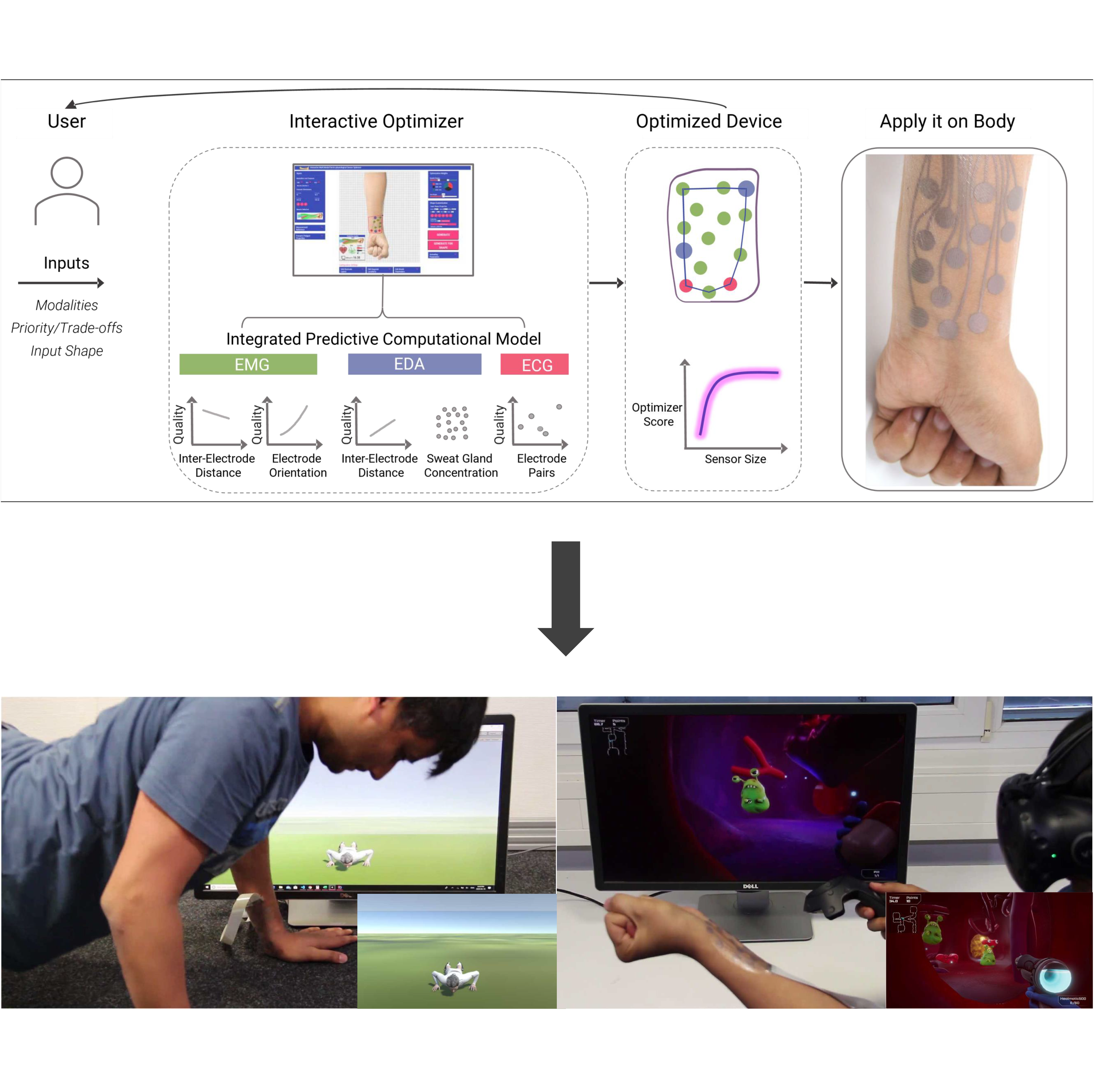

Computational Design And Optimization Of Physiological Sensors

Our computational design tool enables users to quickly design custom physiological sensing devices with a few clicks. It offers a direct, fast, and user-friendly way to set body dimensions, select the modalities the sensor will capture (EMG, ECG, and/or EDA), and select specific muscles for EMG sensing. The sensing quality of one or multiple modalities can be prioritized by moving a slider. Similarly, the trade-off between a compact sensor and the highest possible sensing quality can be continuously adjusted.

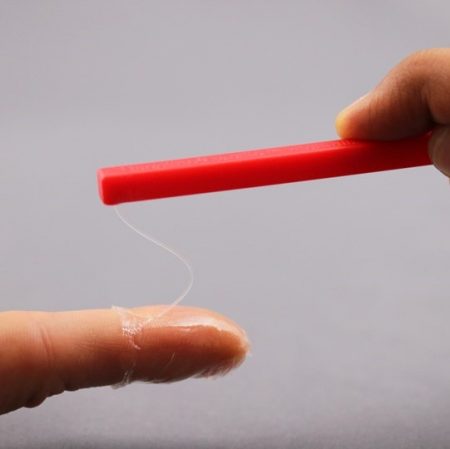

BodyStylus

BodyStylus presents the first computer-assisted approach for on-body design and fabrication of epidermal interfaces. BodyStylus proposes a hand-held tool that augments freehand inking with digital support.

PhysioSkin

PhysioSkin is a rapid fabrication technique for realizing skin-conformal physiological devices. PhysioSkin devices can measure EMG, EDA and ECG signals from the human body.

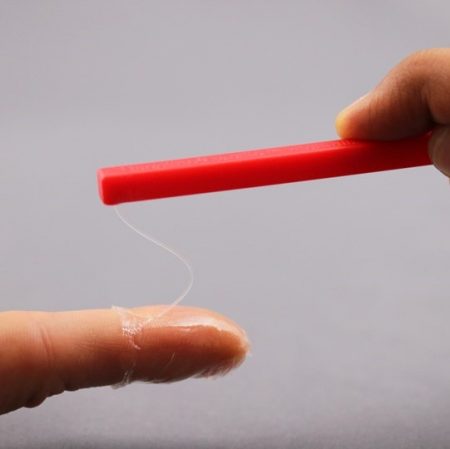

Like a Second Skin

Our work presents psycho-physical findings about how epidermal devices of various elasticity and thickness affect human tactile perception. Findings can guide the design and material selection of future epidermal devices.

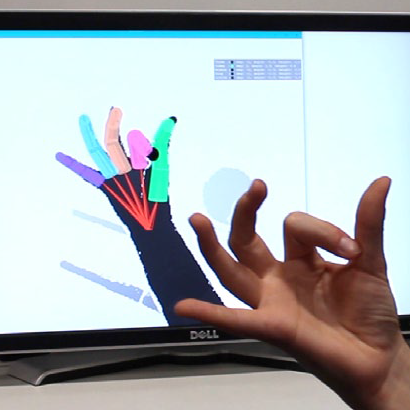

FingerInput

FingerInput is a thumb-to-finger gesture recognition system using depth sensing and convolutional neural networks. It is the first system that accurately detects the touchpoints between fingers as well as finger flexion.

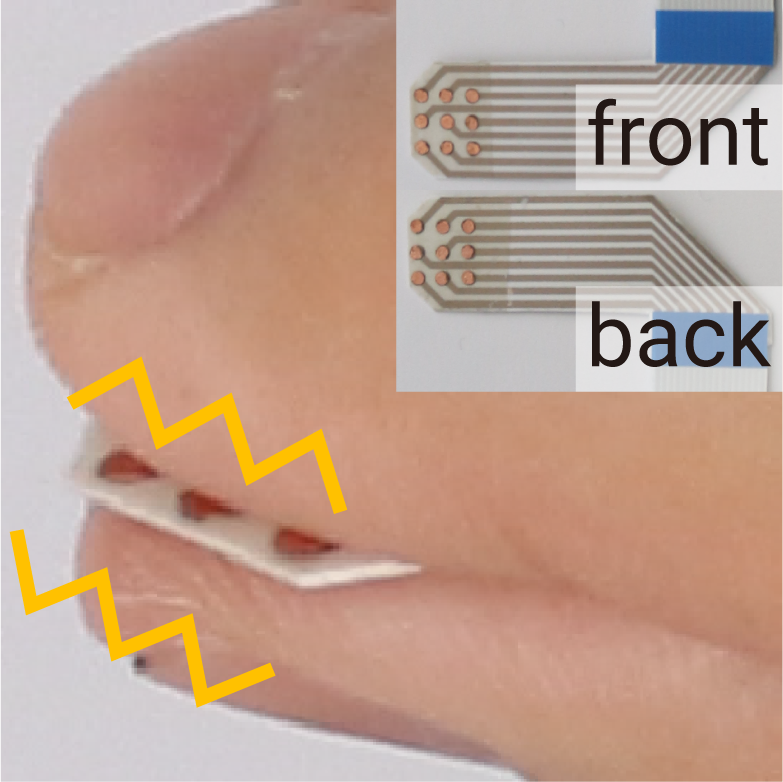

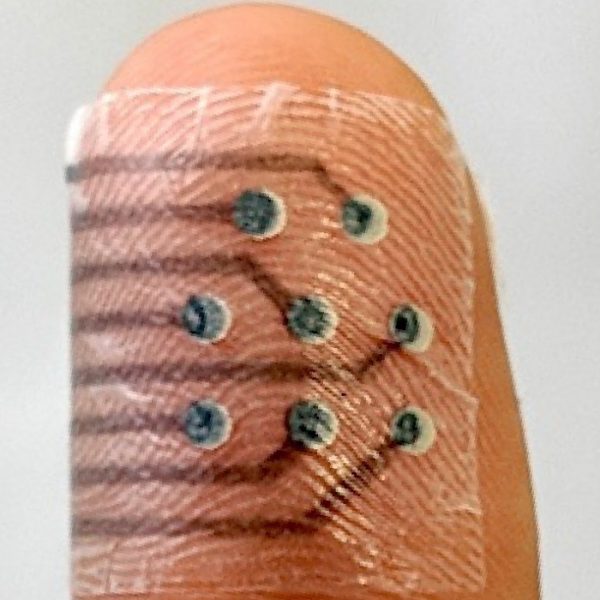

Tacttoo

Tacttoo is a feel-through interface for electro-tactile output on the user’s skin. At less than 35μm in thickness, it is the thinnest tactile interface for wearable computing to date.

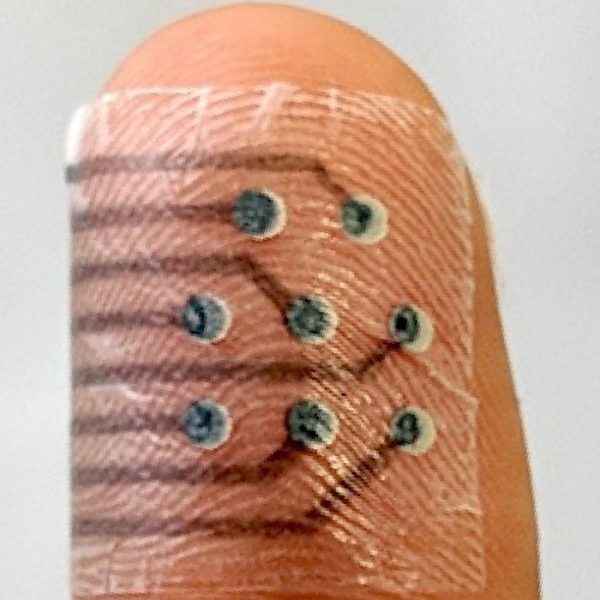

Multi-Touch Skin

Multi-Touch Skin consists of thin and flexible multi-touch sensors for on-skin input. They enable high-resolution multi-touch input on the body and can be customized in size and shape to fit various body locations.

SkinMarks

SkinMarks are thin and conformal skin electronics for on-body interaction. They enable precisely localized input and visual output on strongly curved and elastic body landmarks.

iSkin

We propose iSkin, a novel class of skin-worn sensors for touch input on the body. iSkin is a very thin, flexible, and stretchable sensor overlay made of biocompatible materials.

More Than Touch

An elicitation study on how people interact on the skin to control mobile devices. It investigates skin-specific input modalities, gestures and their associated mental models, and preferred input locations.

On-Body Displays

On-body displays leverage instant availability and human physiology for personal and shared information display.

Shaping Compliance

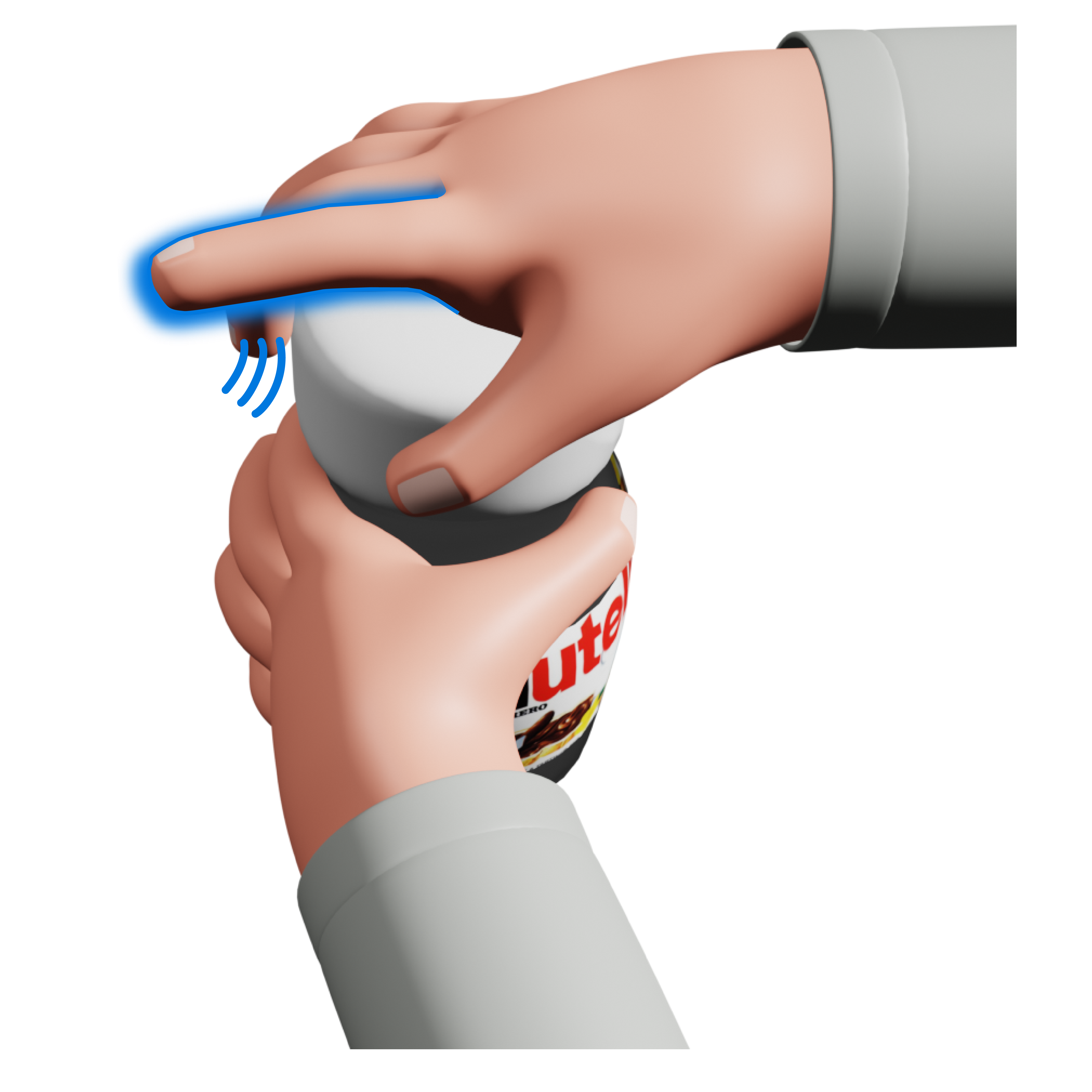

At CHI’24, we propose a novel electrotactile compliance illusion that renders grains of electrical pulses on an electrode array in response to changes in finger force.

Double-Sided Tactile Interactions

At UIST’23, we propose a double-sided electrotactile device with a thin and flexible form factor to fit within pinched fingerpads, comprising two overlapping 3 × 3 electrode arrays.

Weirding Haptics

At UIST’21, we present a paper on rapid prototyping of vibrotactile feedback in virtual reality by leveraging the user’s voice.

Tactlets

We present a novel digital fabrication approach for printing custom, high-resolution controls for electro-tactile output with integrated touch sensing on interactive objects. We call these controls Tactlets.

Springlets

We introduce Springlets, expressive, non-vibrating mechanotactile interfaces on the skin. We implement Springlets as thin and flexible stickers, embedded with shape memory alloy springs, that can be worn on various body locations.

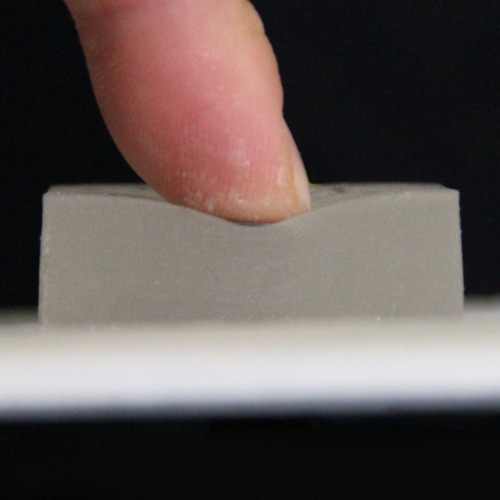

Squish This

We present a first systematic evaluation of the effects of compliance on force input. Results of a visual targeting task for three levels of softness indicate that high force levels appear more demanding for soft surfaces.

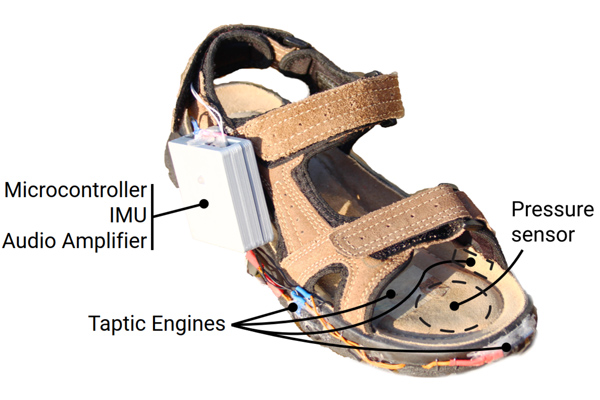

bAReFoot

bARefoot is a prototype shoe that provides tactile impulses tightly coupled to motor actions. This enables the generation of virtual material experiences such as compliance, elasticity, or friction.

3HANDS

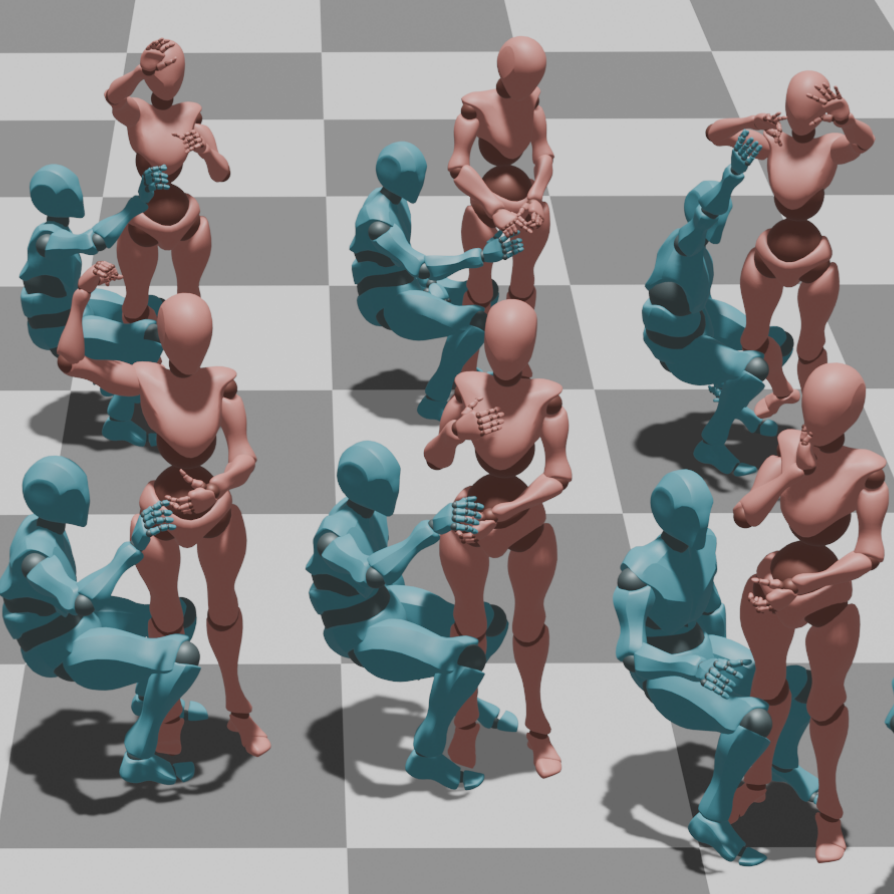

At CHI’25, we present 3HANDS, a novel dataset specifically for data-driven control of supernumerary robotic limbs (SRLs).

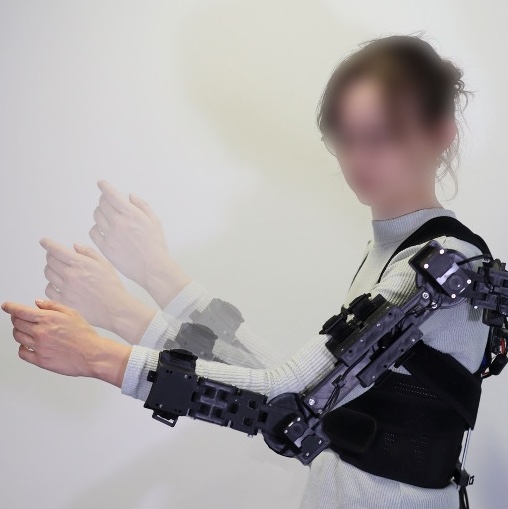

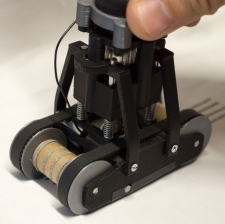

ExoKit

At CHI’25, we present ExoKit, a toolkit for rapid prototyping of interactions for arm-based exoskeletons without requiring robotics expertise.

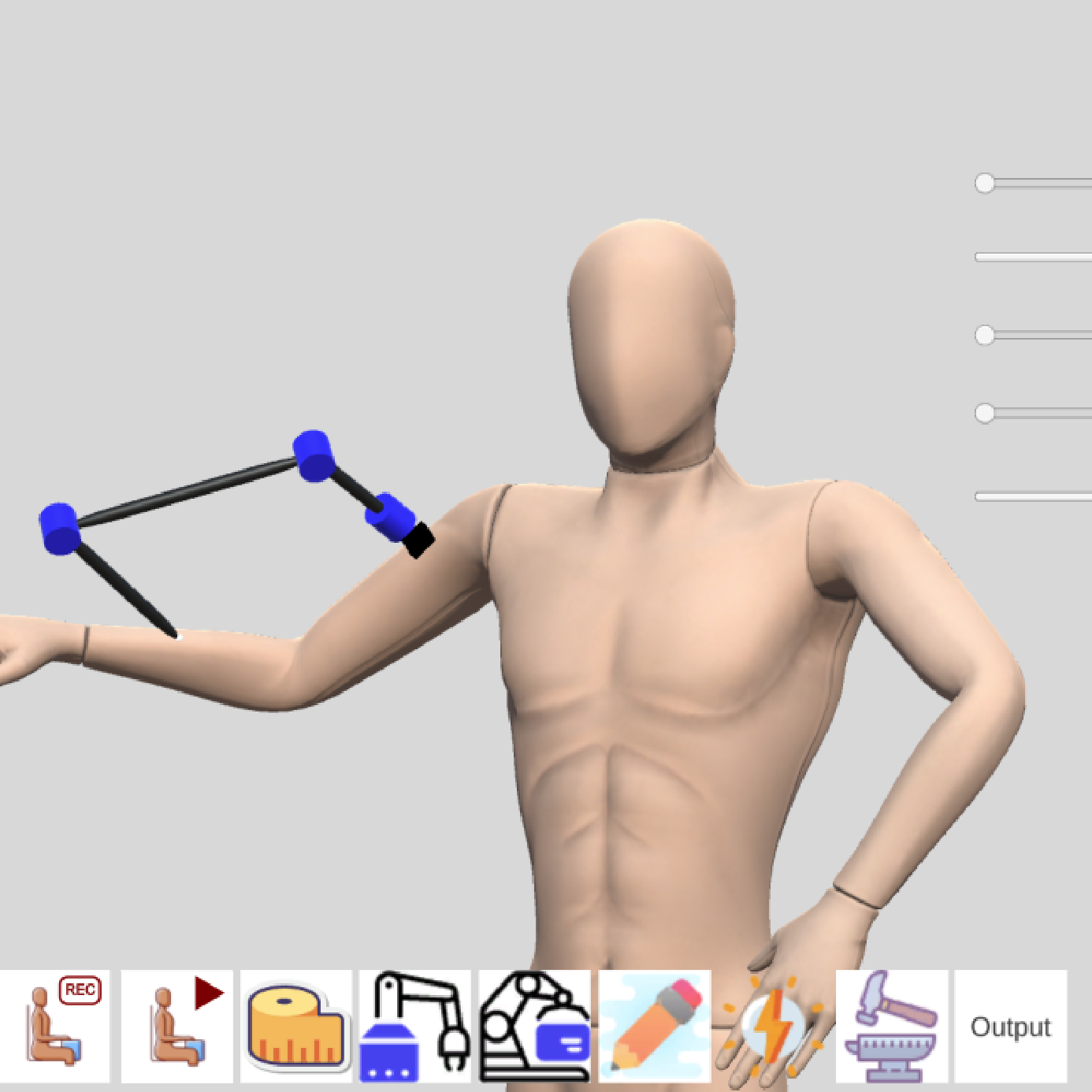

WRLKit

At UIST’23, we present WRLKit, an interactive computational design approach that enables designers to rapidly prototype a personalized wearable robotic limb (WRL) without requiring extensive robotics and ergonomics expertise.

I need a third arm

At CHI’23, we provide a comprehensive set of interactions for basic robot control, navigation, object manipulation, and emergency situations, performed when hands are free or occupied.

RoboSketch

RoboSketch is a robotic printer on wheels with a joystick controller for manual sketching, capable of creating large-scale, high-resolution prints.

EyeCam

Eyecam is an anthropomorphic webcam that mimics a human eye. Through it, we challenge conventional relationships with ubiquitous sensing devices and call for rethinking how sensing devices might appear and behave.

jamSheets

This work introduces layer jamming as an enabling technology for designing deformable, stiffness-tunable, thin sheet interfaces.

CreepyCoCreator

At CHI’25, we present CreepyCoCreator, a co-creative 3D object-building process, focusing on how AI can effectively convey its intent to users during object customization in Virtual Reality.

Interaction Design with Generative AI

Generative Artificial Intelligence (Generative AI) holds significant promise in reshaping interactive systems design, yet its potential across the four key phases of human-centered design remains underexplored. This article addresses this gap by investigating how Generative AI contributes to requirements elicitation, conceptual design, physical design, and evaluation.

GestureCoach

This paper introduces GestureCoach, a system designed to help speakers deliver more engaging talks by guiding them to gesture effectively during rehearsal. GestureCoach combines an LLM-driven gesture recommendation model with a rehearsal interface that proactively cues speakers to gesture appropriately.

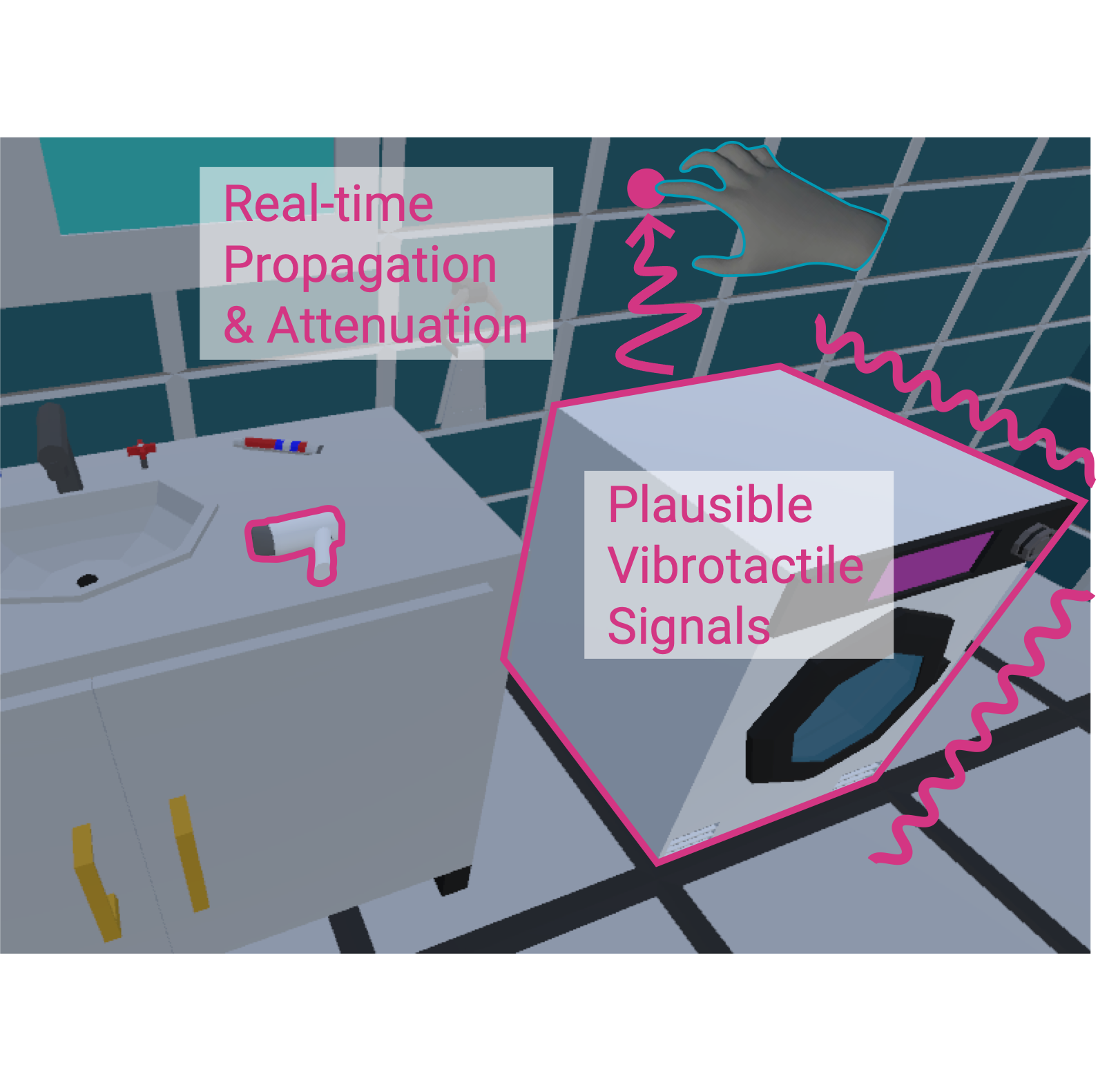

Scene2Hap

This paper introduces Scene2Hap, an LLM-centered system that automatically designs object-level vibrotactile feedback for the entire VR scene.

SoftBioMorph

SoftBioMorph is a fabrication framework that aims to integrate the fabrication know-how of sustainable soft shape-changing interfaces with biopolymers.

Biohybrid Devices

Biohybrid Devices comprises a set of novel fabrication techniques for embedding conductive elements, sensors, and output components through biological (e.g., bio-fabrication and bio-assembling) and digital processes.

Print-A-Sketch

Print-A-Sketch is an open-source handheld printer prototype for sketching circuits and sensors. It combines desirable properties from free-hand sketching and functional electronic printing.

Interactive Bioplastics

A DIY approach for composing soft interactive devices from bio-based and bio-degradable materials.

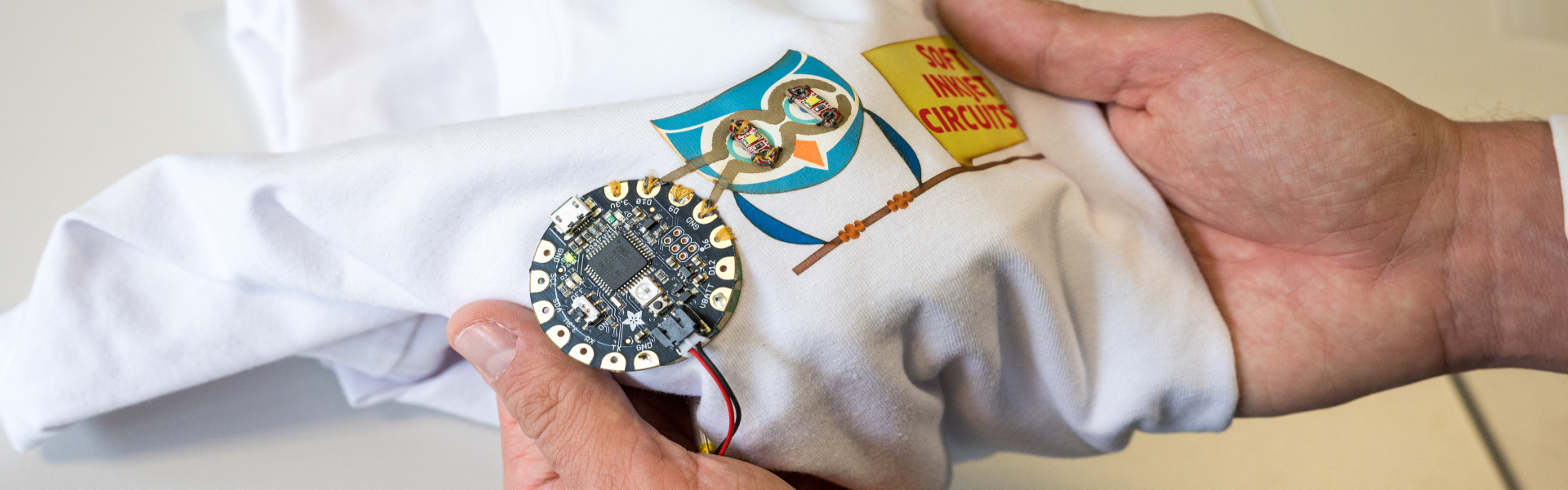

Soft Inkjet Circuits

We introduce multi-ink functional printing on a desktop printer for realizing multi-material devices. This enables circuits on a wide set of materials including temporary tattoo paper, textiles, and thermoplastic.

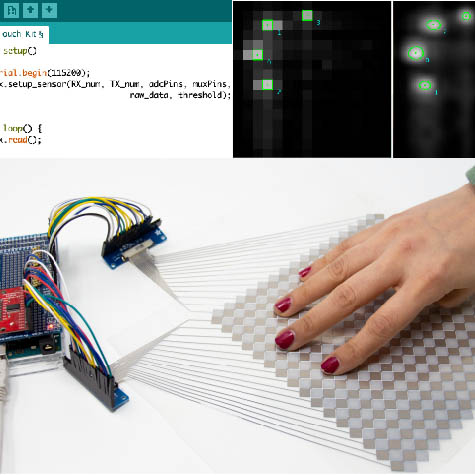

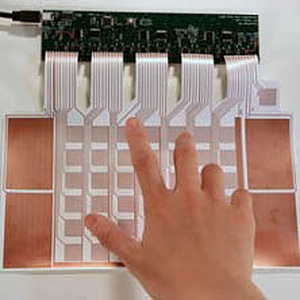

Multi-Touch Kit

Multi-Touch Kit enables electronics novices to rapidly prototype customized capacitive multi-touch sensors using a commodity microcontroller and open-source software, without requiring any specialized hardware.

LASEC

LASEC enables instant do-it-yourself fabrication of circuits with custom stretchability. A new one-step laser ablation-and-cutting process creates circuitry and desired stretchability on a conventional laser cutter.

ObjectSkin

ObjectSkin is a fabrication technique for adding conformal interactive surfaces to everyday objects. It enables multi-touch sensing and display output that seamlessly integrates with highly curved and irregular geometries.

Foldio

Foldio is a new design and fabrication approach for custom interactive objects. The user defines a 3D model and assigns interactive controls; a fold layout containing printable electronics is auto-generated.

PrintScreen

PrintScreen is an enabling technology for digital fabrication of customized flexible displays using thin-film electroluminescence (TFEL).

A Cuttable Multi-touch Sensor

In this project, we propose cutting as a novel paradigm for ad-hoc customization of printed electronic components. We contribute a printed capacitive multi-touch sensor, which can be cut by the end-user to modify its size and shape.

PrintSense

A multimodal on-surface and near-surface sensing technique for planar, curved and flexible surfaces.

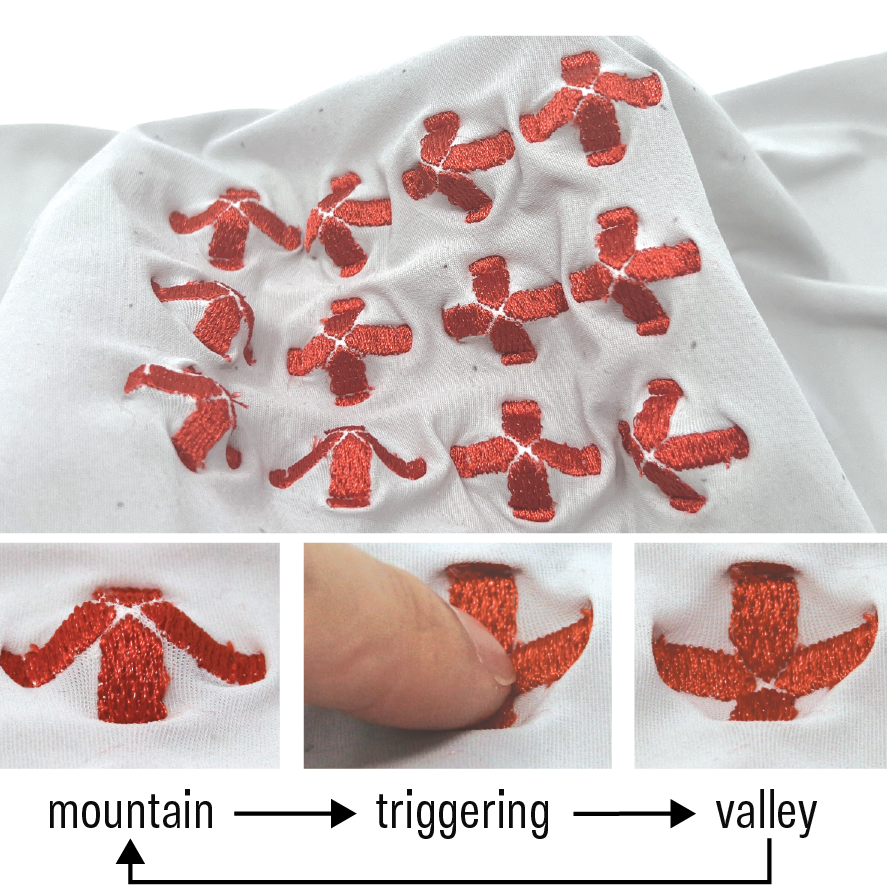

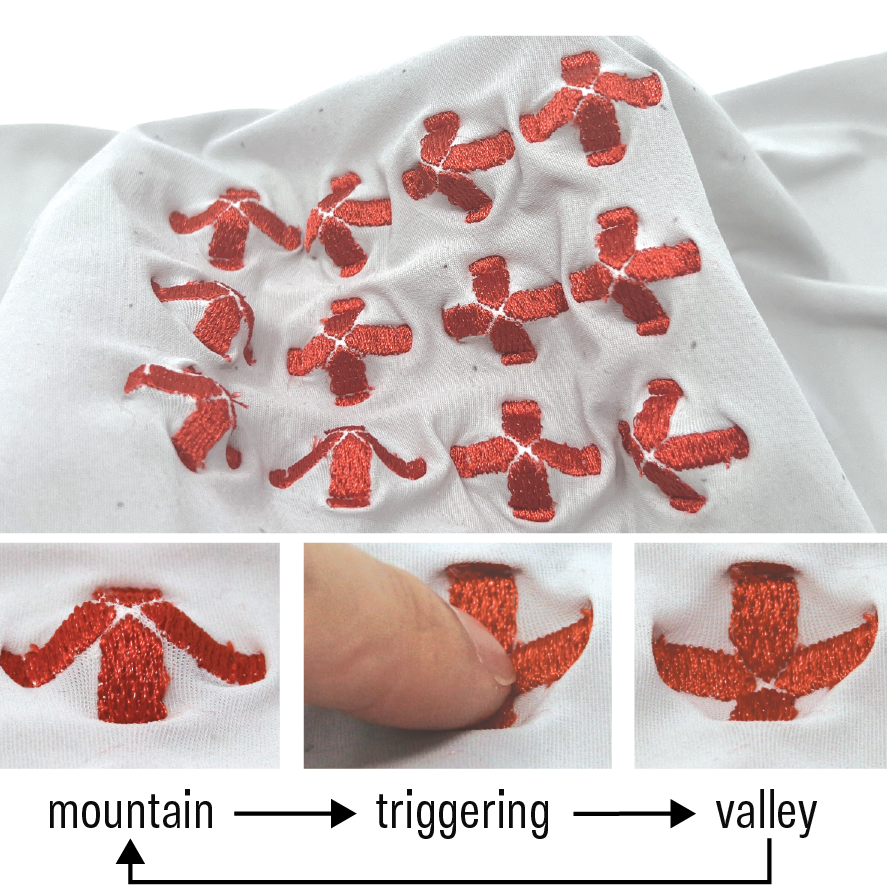

Embrogami

Embrogami is a machine-embroidery technique inspired by origami that enables shape-changing, interactive textiles with customizable structures and behaviors, allowing for flexible, user- or system-actuated textile interfaces.

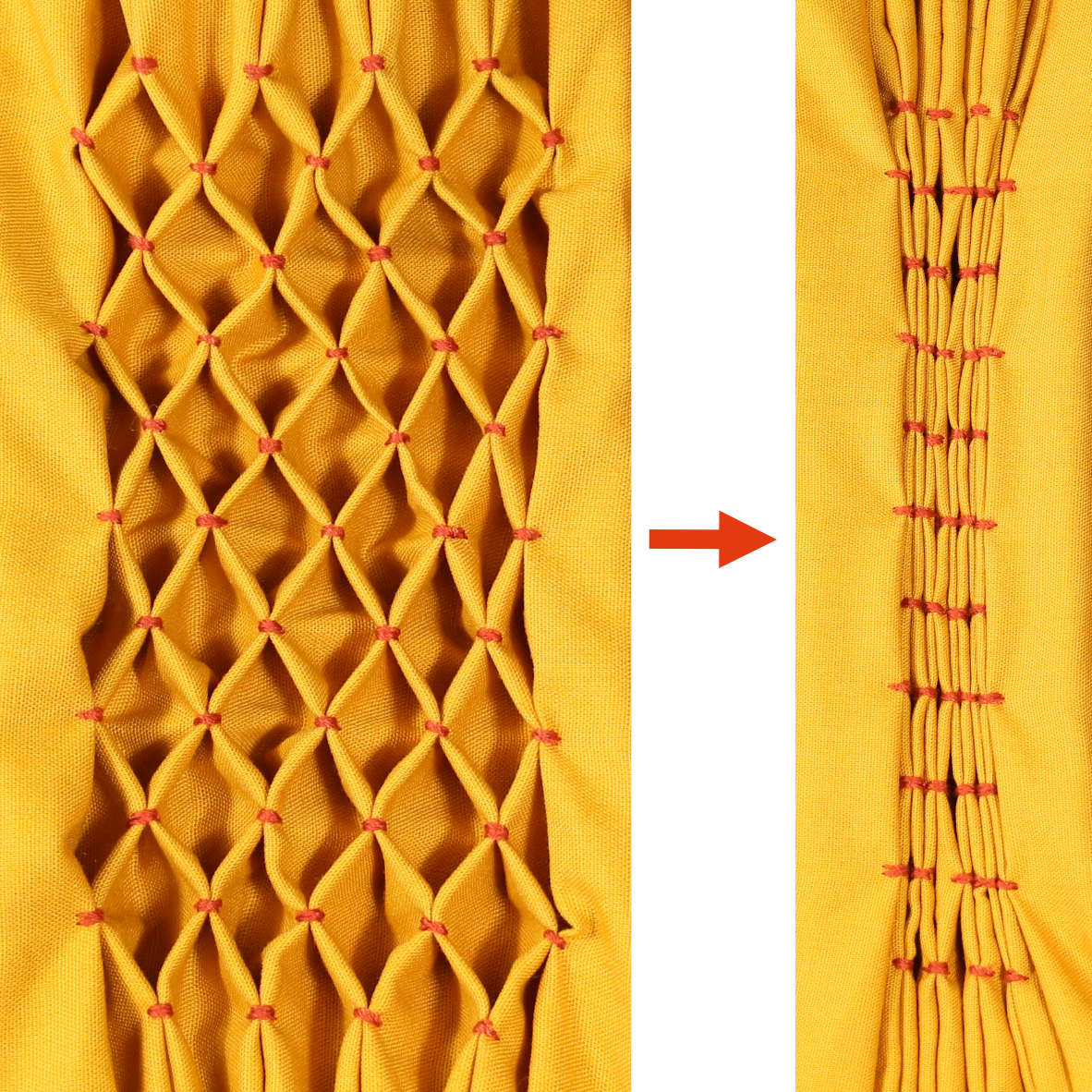

FlexTiles

Shape Memory Alloys (SMAs) afford the seamless integration of shape-changing behaviour into textiles, enabling designers to augment apparel with dynamic shaping and styling.

ClothTiles

ClothTiles is a prototyping platform for fabricating actuators for clothing. It leverages flexible 3D printing and Shape-Memory Alloys (SMAs) alongside new parametric actuation designs.

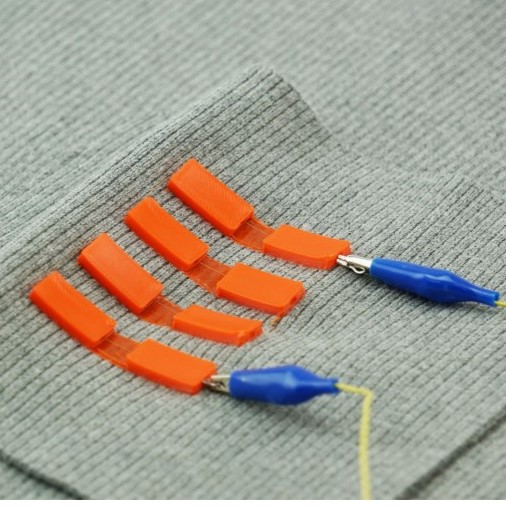

PolySense

We present a method for enabling arbitrary textiles to sense pressure and deformation: In-situ polymerization supports the integration of piezo-resistive properties at the material level, preserving a textile’s haptic and mechanical characteristics.

Rapid Iron-On User Interfaces

Rapid Iron-On User Interfaces support rapid fabrication in a sketching-like fashion, using a handheld dispenser tool that directly applies continuous functional tapes of the desired length.

SmartSleeve

SmartSleeve is a deformable textile sensor that can sense both surface and deformation gestures in real time. It expands the gesture vocabulary with advanced deformation gestures, such as Twirl, Twist, Fold, Push, and Stretch.

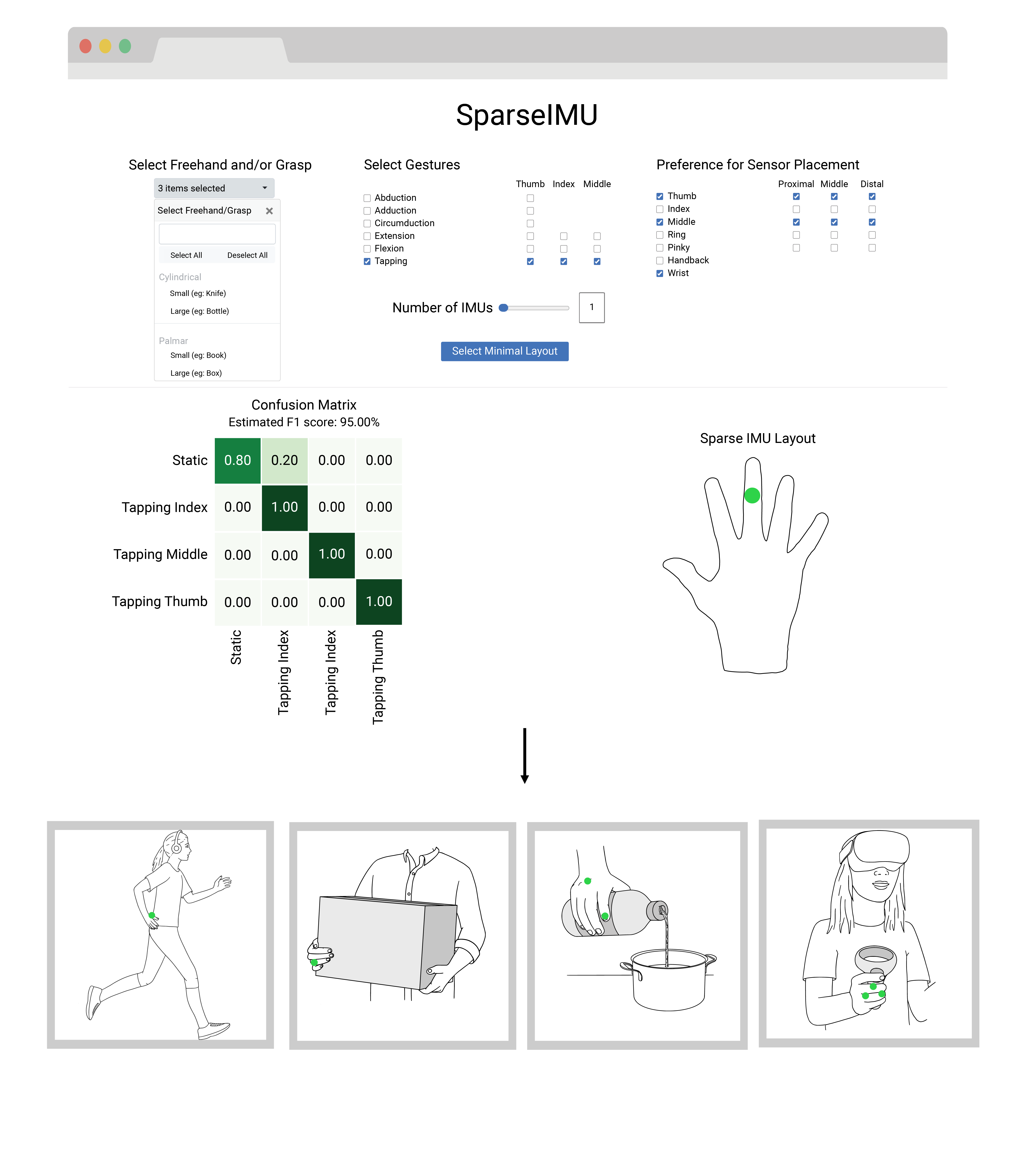

SparseIMU

SparseIMU is a computational design approach for recognizing a desired set of freehand and/or grasping microgestures with minimal hand instrumentation. We also performed a series of analyses, including an evaluation of the entire combinatorial space (393K sensor layouts), and quantified the performance across different layout choices.

SoloFinger

SoloFinger is a concept that addresses the problem of false activations while grasping everyday objects. Through extensive user studies and a series of data-driven analyses, we show that SoloFinger microgestures are rapid, easy to perform, and robust.

Grasping Microgestures

Our work informs gestural interaction with computer systems while hands are busy holding everyday objects. We present results from the first empirical analysis of over 2,400 microgestures performed by end-users.

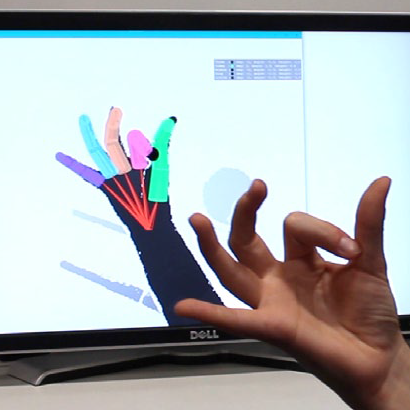

DeformWear

DeformWear are tiny wearable devices that leverage single-point deformation input on various body locations. This enables expressive and precise input using high-resolution pressure, shear, and pinch deformations.

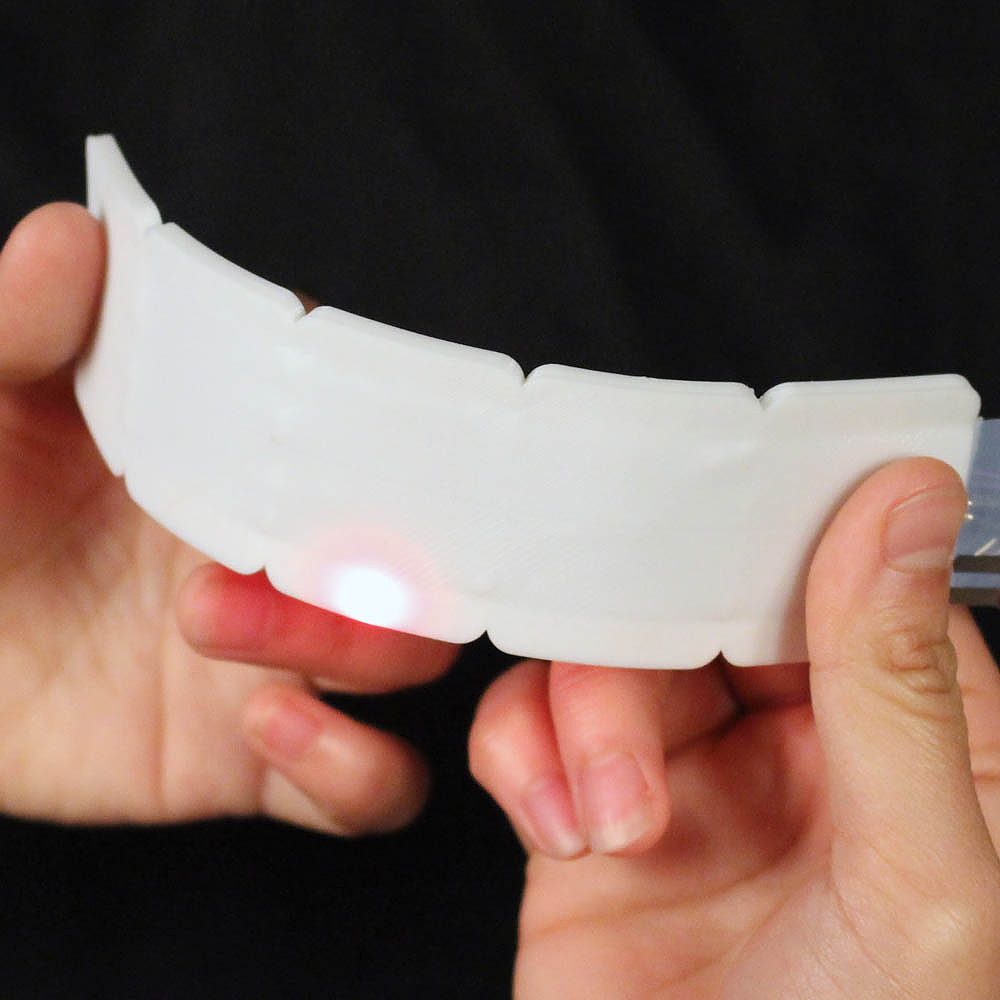

Flexibles

Flexibles add expressive deformation input to interaction with on-screen tangibles. Based on different types of deformation mapping, Flexibles can capture pressing, squeezing, and bending input with multiple levels of intensities.

HotFlex

HotFlex leverages printed embedded elements, capable of computer-controlled state change, to enable hands-on remodeling, personalization, and customization of a 3D-printed object after it is printed.

Capricate

Capricate is a fabrication pipeline that enables users to easily design and 3D print highly customized objects with embedded capacitive multi-touch sensing.

Capacitive Touch Sensing on General 3D Surfaces

We propose a method to adapt capacitive multi-touch sensors to complex 3D surfaces, ensuring high-resolution, robust detection by computing a regular grid of electrodes, optimizing their layout, and minimizing the number of required controllers and connections.

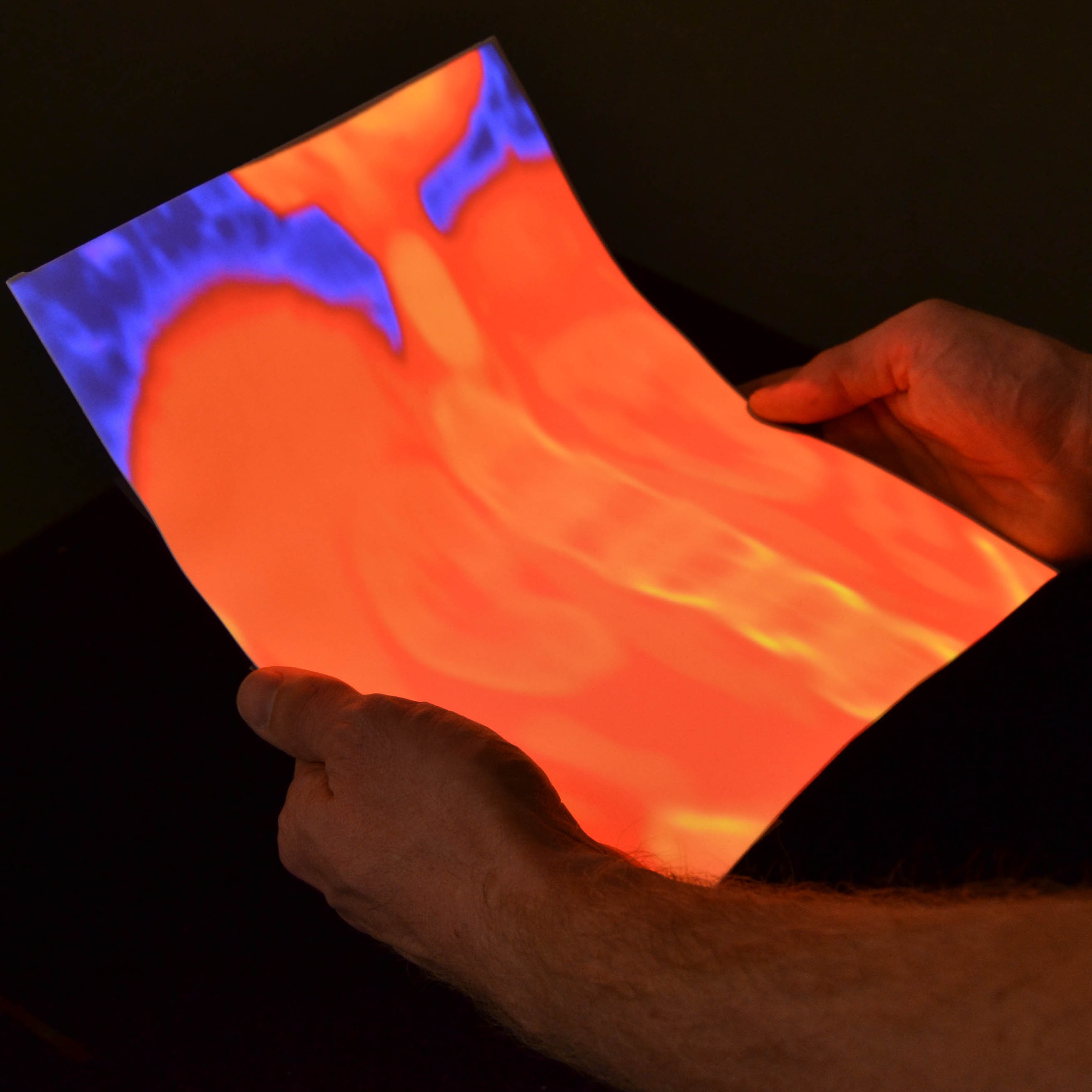

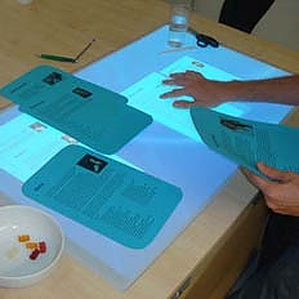

Flexpad

Flexpad is an interactive system that combines a depth camera and a projector to transform sheets of plain paper or foam into flexible, highly deformable and spatially aware handheld displays.

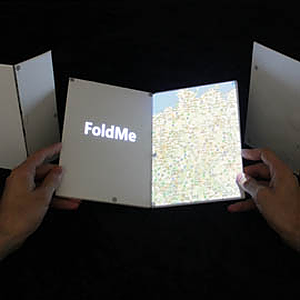

FoldMe

In this project, we present a novel device concept that features double-sided displays which can be folded using predefined hinges.

Xpaaand

Future generations of displays will be thin, lightweight, and flexible. In this project, we develop novel interaction techniques for displays that can be dynamically expanded and collapsed.

Collaborative Use of Rollable Display

Rollable displays allow users to create tabletop-sized displays on the go. In this project, we investigate their collaborative use in the context of mobile face-to-face encounters.

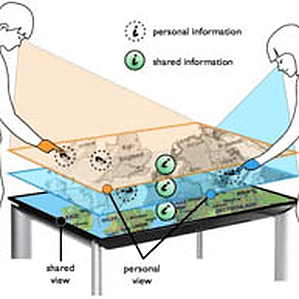

Occlusion-aware Tabletop

This project focuses on designing interaction techniques for hybrid tabletop displays, which enable users to concurrently manipulate digital and physical media on one single surface.

CloudDrops

CloudDrops is a pervasive awareness platform that integrates virtual information from the Web more closely with the contextually rich physical spaces in which we live and work.

PaperVideo

In this project, we investigated how interaction with video can benefit from paper-like displays that support interaction with motion and sound.

LightBeam

In this project, we investigate how the unique affordances of pico projectors can be leveraged for novel, tangible interaction techniques with physical, real-world objects.

Mobile Multimedia Interaction

We develop novel interaction techniques for the mobile navigation of multimedia content.

CoScribe

CoScribe is a collaborative platform for knowledge workers, which tightly integrates paper-based and digital documents. It offers novel interaction techniques for cross-media annotations, hyperlinks, and tags on both types of documents.

Letras

Letras provides a novel toolkit for the support of pen-based interaction in mobile and ubiquitous computing settings.

Our research projects receive funding from the European Research Council (ERC Starting Grant “InteractiveSkin”), Deutsche Forschungsgemeinschaft (DFG), Bosch Research, Google, and the State of Saarland.

Saarland University

Human-Computer Interaction Lab

Department of Computer Science

Campus E 1.7

66123 Saarbrücken

Germany
