Grasping Microgestures

Body Interaction, Adwait

(CHI 2019)

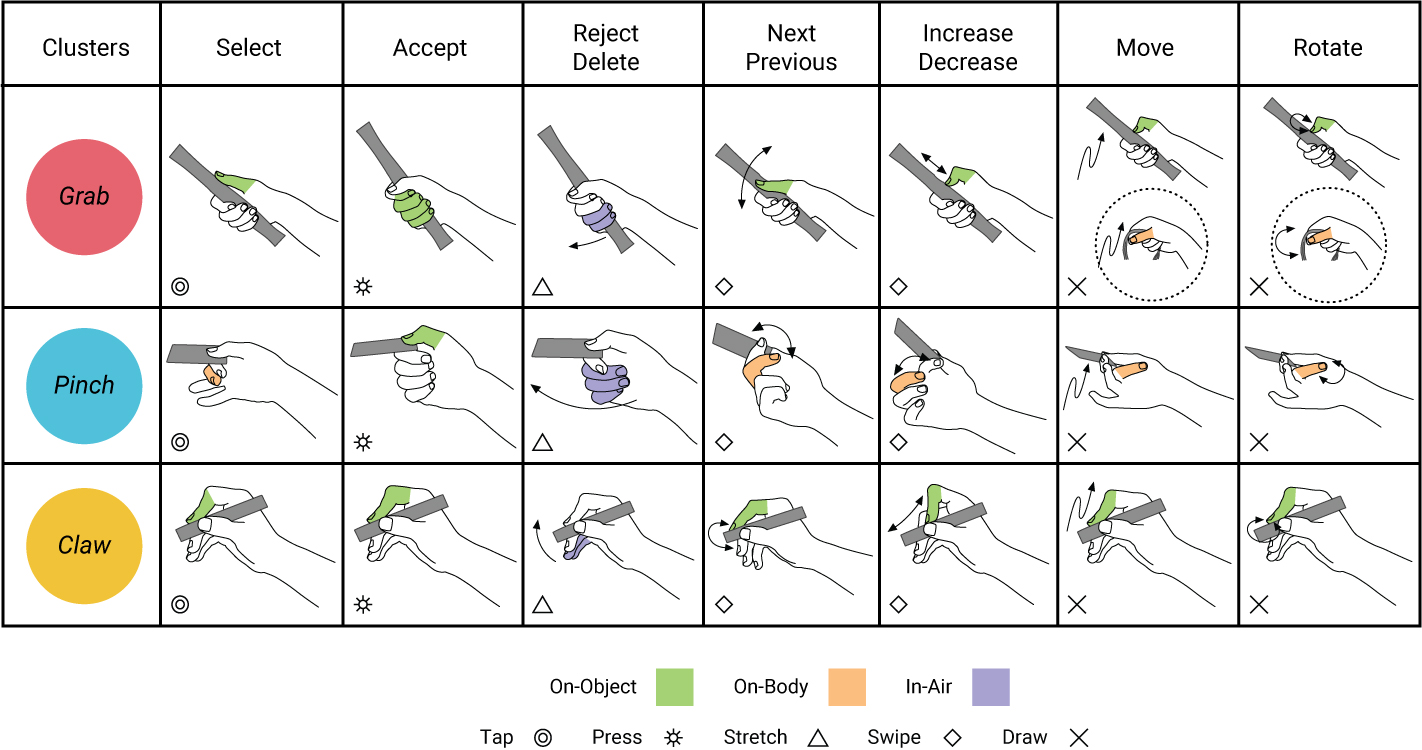

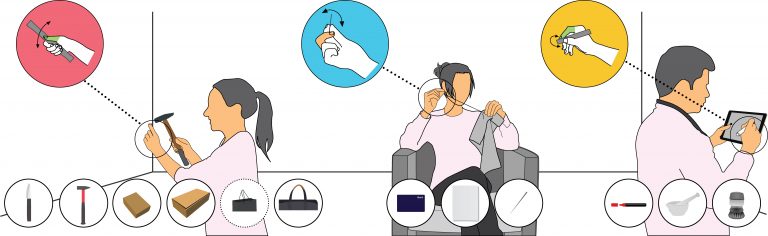

Our work informs gestural interaction with computer systems while the hands are busy holding everyday objects. We present results from the first empirical analysis of over 2,400 microgestures performed by end-users; we call these gestures Grasping Microgestures. Our key contribution is to show how grasps and object geometries affect the design space of microgestures performed on handheld objects, given the interaction constraints imposed by holding a physical object in one’s hand. We characterize users’ preferred types of action when their hands are busy and show that these actions depend mainly on the referent rather than on the grasp or object. In contrast, the choice of fingers and action location is strongly influenced by the grasp and the size of the handheld object. We extend the existing elicitation method by proposing statistical clustering of users’ elicited gestures. This approach helps uncover previously undiscovered patterns through a fully data-driven interpretation.
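To make the idea of clustering elicited gestures concrete, here is a minimal sketch. The text above does not say which clustering algorithm or gesture encoding was used, so everything in it is an assumption for illustration: each gesture is encoded as a tuple of three hypothetical categorical properties (action type, finger, location), the distance is the fraction of properties that differ, and clusters are merged with average-linkage agglomerative clustering. The toy data is invented and is not taken from the study.

```python
from itertools import combinations

# Hypothetical encoding: (action type, finger, location).
# Toy data for illustration only, not results from the study.
GESTURES = [
    ("tap", "index", "object"),
    ("tap", "index", "thumb"),
    ("tap", "middle", "object"),
    ("swipe", "thumb", "index"),
    ("swipe", "thumb", "middle"),
    ("swipe", "thumb", "index"),
]


def hamming(a, b):
    """Fraction of properties on which two gestures differ."""
    return sum(x != y for x, y in zip(a, b)) / len(a)


def average_linkage(c1, c2, data):
    """Mean pairwise distance between the members of two clusters."""
    total = sum(hamming(data[i], data[j]) for i in c1 for j in c2)
    return total / (len(c1) * len(c2))


def cluster(data, k):
    """Merge the two closest clusters repeatedly until only k remain."""
    clusters = [[i] for i in range(len(data))]
    while len(clusters) > k:
        a, b = min(
            combinations(range(len(clusters)), 2),
            key=lambda p: average_linkage(clusters[p[0]], clusters[p[1]], data),
        )
        clusters[a] = clusters[a] + clusters[b]
        del clusters[b]  # b > a, so index a is unaffected
    return [sorted(c) for c in clusters]


if __name__ == "__main__":
    for members in cluster(GESTURES, k=2):
        print([GESTURES[i] for i in members])
```

On this toy data the two clusters separate the tap gestures from the swipe gestures. In a real analysis the number of clusters would have to be chosen from the data rather than fixed in advance.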